* Question

Sensor information fusion classification

* Answer

Sensor information fusion is the combined processing of data from multiple sensors to obtain more accurate, reliable, and useful information than any single sensor provides. It can be classified by processing level, fusion method, timing, sensor type, and spatial distribution. Here are some common classifications:

1. Classification Based on Processing Levels

1. Data-Level Fusion

– Concept: Combines raw data from multiple sensors directly, often using statistical methods or signal processing techniques.

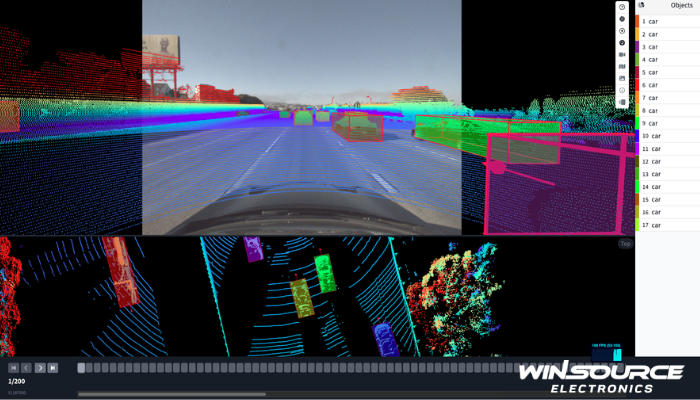

– Application: Used for low-level signal processing, such as image registration and point cloud data fusion.

2. Feature-Level Fusion

– Concept: Extracts features from each sensor's data, then combines and analyzes those features to obtain the fusion result.

– Application: Commonly used in machine learning and pattern recognition, such as speech recognition and image feature matching.

3. Decision-Level Fusion

– Concept: Each sensor independently analyzes its own data and makes a partial decision; the partial decisions are then combined to produce a final decision.

– Application: Suitable for systems requiring distributed decisions or multi-source confirmation, such as fault diagnosis and multi-sensor environment monitoring.
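As a rough illustration of decision-level fusion, the sketch below combines per-sensor decisions with a simple majority vote. Majority voting is only one possible combination rule, chosen here for brevity; the function name `majority_vote` and the fault-detector labels are hypothetical.

```python
from collections import Counter


def majority_vote(decisions):
    """Fuse per-sensor class labels by simple majority vote.

    `decisions` holds one label per sensor. Ties are broken in favour
    of the tied label that appears first in the list.
    """
    if not decisions:
        raise ValueError("at least one sensor decision is required")
    counts = Counter(decisions)
    best = max(counts.values())
    for label in decisions:
        if counts[label] == best:
            return label


# Hypothetical local decisions from three independent fault detectors.
local_decisions = ["fault", "normal", "fault"]
fused = majority_vote(local_decisions)
print(fused)
```

Only the labels cross the network here, which is why decision-level fusion is the cheapest of the three levels in bandwidth, at the price of discarding the information in the raw data and features.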

2. Classification Based on Fusion Methods

1. Probabilistic and Statistical Methods

– Concept: Uses probability theory and statistical methods to combine sensor data. Common methods include Bayesian estimation, Kalman filtering, and Markov chains.

– Application: Used for multi-sensor tracking, navigation, and positioning.
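A minimal sketch of Bayesian estimation for the simplest case: several sensors measure the same scalar quantity, each with independent Gaussian noise of known variance, and no prior information is assumed. Under those assumptions the fused estimate is the inverse-variance weighted mean. The name `fuse_gaussian` and the example readings are illustrative only.

```python
def fuse_gaussian(measurements):
    """Fuse independent Gaussian measurements of one scalar quantity.

    `measurements` is a list of (value, variance) pairs. Returns the
    fused (mean, variance) under a flat prior: each value is weighted
    by the inverse of its variance.
    """
    if not measurements:
        raise ValueError("at least one measurement is required")
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    mean = sum(w * x for w, (x, _) in zip(weights, measurements)) / total
    return mean, 1.0 / total


# Hypothetical range readings in metres: a noisy sensor and a precise one.
mean, var = fuse_gaussian([(10.0, 4.0), (12.0, 1.0)])
print(mean, var)
```

The fused variance is never larger than the smallest input variance, which is the formal sense in which fusion yields "more accurate" information.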

2. Evidence Theory Methods

– Concept: Based on evidence theory (such as Dempster-Shafer theory) to handle uncertainty and ambiguity.

– Application: Widely used in fault diagnosis and target recognition.
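To make the evidence-theory idea concrete, here is a small sketch of Dempster's rule of combination for two sensors. Each sensor reports a mass function that maps sets of hypotheses to belief mass; mass on the full set expresses "don't know". The representation (dicts keyed by `frozenset`) and the example masses are assumptions made for illustration.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule.

    Keys are frozensets of hypotheses, values are masses summing to 1.
    Mass landing on an empty intersection is treated as conflict and
    removed by renormalisation.
    """
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    norm = 1.0 - conflict
    return {k: v / norm for k, v in combined.items()}


A, B = frozenset({"A"}), frozenset({"B"})
AB = A | B  # the whole frame: ignorance between A and B

# Hypothetical evidence from two target-recognition sensors.
sensor1 = {A: 0.6, AB: 0.4}
sensor2 = {A: 0.5, B: 0.3, AB: 0.2}
fused = dempster_combine(sensor1, sensor2)
print(fused)
```

Note that Dempster's rule can give counterintuitive results when the sources conflict strongly, so the conflict mass is worth inspecting before trusting the output.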

3. Neural Networks and Deep Learning Methods

– Concept: Employs neural networks and deep learning models to fuse sensor data and perform pattern recognition, extracting more complex features and relationships.

– Application: Used in computer vision, autonomous driving, speech processing, etc.

4. Rule-Based and Logical Reasoning Methods

– Concept: Uses predefined rules and logical relationships to reason about and fuse sensor information.

– Application: Suitable for expert systems, intelligent monitoring, and other scenarios.

3. Classification Based on Time

1. Instantaneous Fusion

– Concept: Combines sensor data obtained at the same moment in time.

– Application: Suitable for object recognition and state estimation in static scenes, such as obstacle detection on UAVs.

2. Time-Series Fusion

– Concept: Combines data over multiple time points to obtain dynamic information.

– Application: Used in dynamic systems like mobile robots and vehicle trajectory tracking.
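Time-series fusion can be sketched with a scalar Kalman filter, which folds each new measurement into a running estimate instead of treating every instant in isolation. The sketch assumes a constant-state (random walk) model with known noise variances; the function name `kalman_1d` and all parameter values are illustrative.

```python
def kalman_1d(measurements, meas_var, process_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter with a constant-state (random walk) model.

    Returns the filtered estimate after each measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is assumed unchanged, uncertainty grows.
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


# Hypothetical sequence: a sensor repeatedly reporting 5.0.
estimates = kalman_1d([5.0] * 50, meas_var=1.0, process_var=0.01)
print(estimates[-1])
```

Instantaneous fusion corresponds to running only the update step once, with no memory of earlier measurements.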

4. Classification Based on Sensor Type

1. Homogeneous Sensor Fusion

– Concept: Combines data from the same type of sensors (e.g., multiple cameras or multiple temperature sensors).

– Application: Suitable for improving measurement accuracy and reducing sensor noise.
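The noise-reduction claim can be made precise with a short derivation. It assumes $N$ identical sensors measuring the same quantity $x$, each with independent zero-mean noise $v_i$ of equal variance $\sigma^2$; real sensors with correlated noise or bias will gain less.

```latex
\begin{align}
z_i &= x + v_i, \qquad \mathbb{E}[v_i] = 0, \quad \operatorname{Var}(v_i) = \sigma^2, \quad i = 1, \dots, N \\
\hat{x} &= \frac{1}{N} \sum_{i=1}^{N} z_i \\
\operatorname{Var}(\hat{x}) &= \frac{1}{N^2} \sum_{i=1}^{N} \operatorname{Var}(v_i) = \frac{\sigma^2}{N}
\end{align}
```

Under these assumptions the standard deviation of the averaged estimate falls as $1/\sqrt{N}$, so four sensors halve the noise of one.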

2. Heterogeneous Sensor Fusion

– Concept: Combines data from different types of sensors (e.g., camera and LiDAR, or IMU and GPS).

– Application: Widely used in autonomous driving, environmental perception, and other fields.
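For the IMU and GPS pairing, one common lightweight approach is a complementary filter, sketched below in one dimension. It assumes velocity estimates are available from the IMU side (smooth but prone to drift) and position fixes from GPS (noisy but drift-free); the function name, the blending factor `alpha`, and the data are hypothetical, and production systems more often use a Kalman filter for this.

```python
def complementary_filter(velocities, gps_positions, dt, alpha=0.9, x0=0.0):
    """Blend dead-reckoned position with GPS fixes in one dimension.

    alpha close to 1 trusts the velocity integration more;
    (1 - alpha) pulls the estimate toward the GPS position.
    """
    if len(velocities) != len(gps_positions):
        raise ValueError("inputs must have the same length")
    x = x0
    track = []
    for v, gps in zip(velocities, gps_positions):
        predicted = x + v * dt  # short-term motion from the velocity input
        x = alpha * predicted + (1.0 - alpha) * gps
        track.append(x)
    return track


# Hypothetical data: true speed 1 m/s, velocity input biased by +0.2 m/s,
# GPS fixes exact. Pure dead reckoning would be off by 1.0 m after 5 s.
gps = [1.0, 2.0, 3.0, 4.0, 5.0]
track = complementary_filter([1.2] * 5, gps, dt=1.0)
print(track[-1])
```

The two sensors fail in different ways, so each constrains the other's error; that complementarity is the main argument for heterogeneous fusion.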

5. Classification Based on Spatial Distribution

1. Centralized Fusion

– Concept: All sensor data is sent to a central processing unit for comprehensive analysis.

– Advantages: Provides a global fusion result; the trade-off is higher communication and processing overhead.

2. Distributed Fusion

– Concept: Each sensor independently processes and preliminarily analyzes its own data, then sends the results to a fusion node for the final fusion.

– Advantages: Reduces communication and processing load and improves system robustness.
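The difference between the two architectures can be illustrated with a deliberately simple case: estimating a mean temperature. In the centralized version every raw reading travels to the central node; in the distributed version each node sends only a compact summary. The function names and readings are hypothetical, and real distributed fusion usually exchanges local state estimates and covariances rather than plain sums.

```python
def centralized_mean(all_readings):
    """Central node receives every raw reading from every sensor."""
    flat = [r for readings in all_readings for r in readings]
    return sum(flat) / len(flat)


def local_summary(readings):
    """Runs on each sensor node: reduce raw readings to (count, sum)."""
    return len(readings), sum(readings)


def distributed_mean(summaries):
    """Fusion node combines the compact per-node summaries."""
    total_count = sum(n for n, _ in summaries)
    total_sum = sum(s for _, s in summaries)
    return total_sum / total_count


# Hypothetical temperature readings from three sensor nodes.
readings = [[20.0, 21.0, 19.0], [22.0, 20.0], [18.0, 20.0, 21.0, 19.0]]

central = centralized_mean(readings)
distributed = distributed_mean([local_summary(r) for r in readings])
print(central, distributed)
```

Both paths give the same result here, but the distributed one transmits two numbers per node regardless of how many readings each node collects.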

These classifications help in understanding sensor information fusion across application scenarios and in designing appropriate fusion strategies. Each one addresses different fusion goals, data sources, and processing methods.
